Recently, for one of my customers, I had the chance to install a couples of Exadata X7-2 using the new Elastic Configuration. The major benefits of using Elastic Configuration consists in the possibility to acquire the Exadata Machine with almost any possible combination of Database Nodes and Storage Cells.

In the past we were used to standard Oracle pre-defined Exadata Machine configurations: Eighth Rack, Quarter Rack, Half Rack and Full Rack, which is still possible, but not flexible enough.

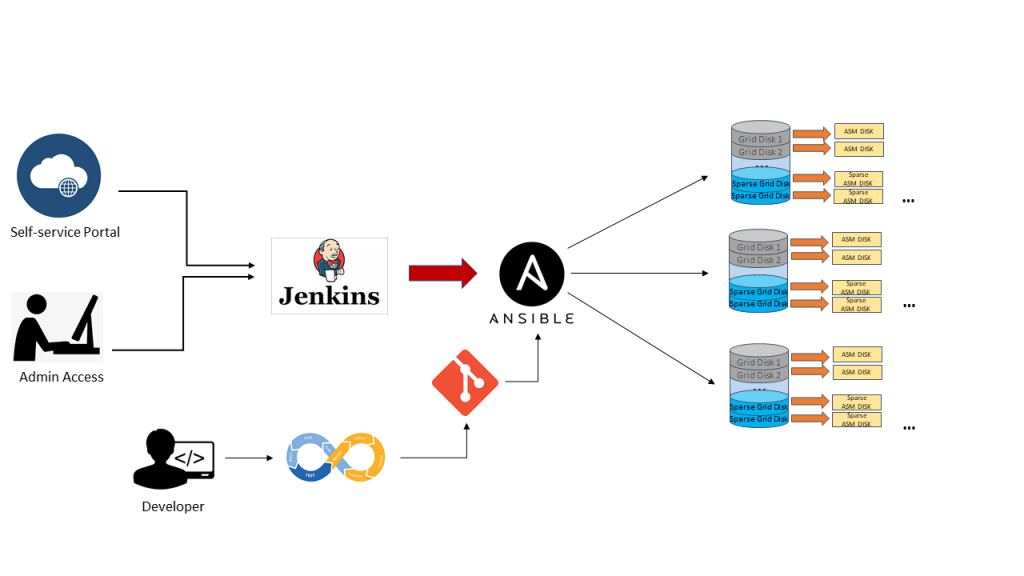

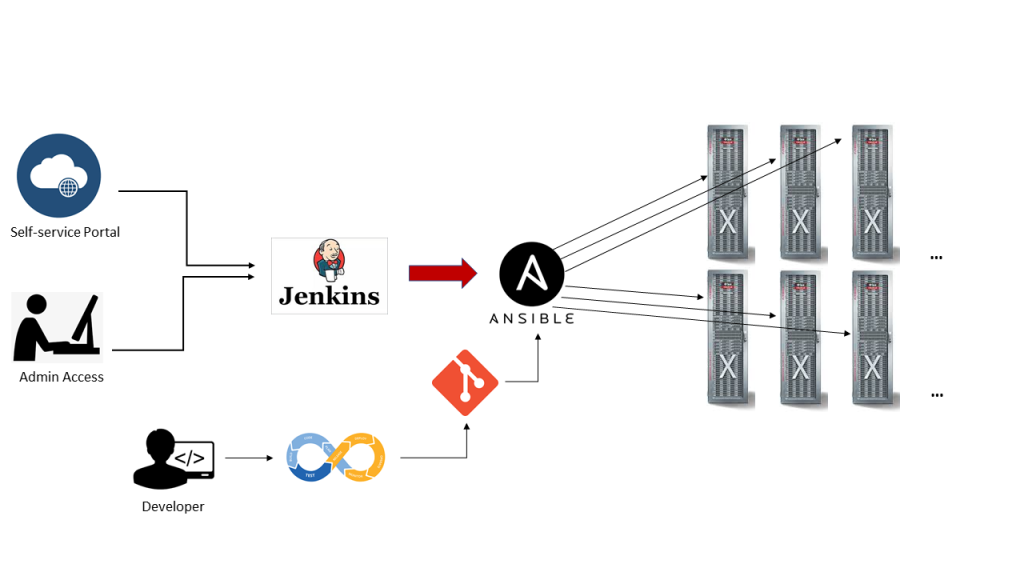

The pictures below highlight the differences between the two configurations:

source: Oracle Data Sheet Exadata Database Machine X7-2

Deployment Exadata Elactic Configuration

The elastic configuration process automates the initial IP address allocations to databasenodes and storage cells, regardless the ordered configuration. The Exadata Machine is connected to the InfiniBand switches using a standard cabling methodology which allows to determinate the node’s location in the rack. This information is therefore used when the nodes are powered up for the first time in order to assign the initial default IPs.

[root@exatest-iba0 ~]# ibhosts

Ca : 0x579b0123796ba0 ports 2 "node10 elasticNode 192.168.10.17,192.168.10.18 ETH0"

Ca : 0x579b01237966e0 ports 2 "node8 elasticNode 192.168.10.15,192.168.10.16 ETH0"

Ca : 0x579b0123844ab0 ports 2 "node6 elasticNode 192.168.10.11,192.168.10.12 ETH0"

Ca : 0x579b0123845e50 ports 2 "node5 elasticNode 192.168.10.7,192.168.10.8 ETH0"

Ca : 0x579b0123845fe0 ports 2 "node4 elasticNode 192.168.10.40,172.16.2.40 ETH0"

Ca : 0x579b0123845ea0 ports 2 "node3 elasticNode 192.168.10.9,192.168.10.10 ETH0"

Ca : 0x579b0123812b90 ports 2 "node2 elasticNode 192.168.10.1,192.168.10.2 ETH0"

Ca : 0x579b0123812970 ports 2 "node1 elasticNode 192.168.10.3,192.168.10.4 ETH0"

[root@exatest-iba0 ~]#

Because the Virtualization option was required, it has to be activated at this stage:

[root@node8 ~]# /opt/oracle.SupportTools/switch_to_ovm.sh

2019-03-07 01:05:22 -0800 [INFO] Switch to DOM0 system partition /dev/VGExaDb/LVDbSys3 (/dev/mapper/VGExaDb-LVDbSys3)

2019-03-07 01:05:22 -0800 [INFO] Active system device: /dev/mapper/VGExaDb-LVDbSys1

2019-03-07 01:05:22 -0800 [INFO] Active system device in boot area: /dev/mapper/VGExaDb-LVDbSys1

2019-03-07 01:05:23 -0800 [INFO] Set active system device to /dev/VGExaDb/LVDbSys3 in /boot/I_am_hd_boot

2019-03-07 01:05:23 -0800 [INFO] Creating /.elasticConfig on DOM0 boot partition /boot

2019-03-07 01:05:34 -0800 [INFO] Reboot has been initiated to switch to the DOM0 system partition

Connection to 192.168.1.8 closed by remote host.

Connection to 192.168.1.8 closed.

✘

After the switch to OVM command it is time to reclaim the space initially used by the Linux bare metal Logical Volumes:

[root@node8 ~]# /opt/oracle.SupportTools/reclaimdisks.sh -free -reclaim

Model is ORACLE SERVER X7-2

Number of LSI controllers: 1

Physical disks found: 4 (252:0 252:1 252:2 252:3)

Logical drives found: 1

Linux logical drive: 0

RAID Level for the Linux logical drive: 5

Physical disks in the Linux logical drive: 4 (252:0 252:1 252:2 252:3)

Dedicated Hot Spares for the Linux logical drive: 0

Global Hot Spares: 0

[INFO ] Check for DOM0 with inactive Linux system disk

[INFO ] Valid DOM0 with inactive Linux system disk is detected

[INFO ] Number of partitions on the system device /dev/sda: 3

[INFO ] Higher partition number on the system device /dev/sda: 3

[INFO ] Last sector on the system device /dev/sda: 3509760000

[INFO ] End sector of the last partition on the system device /dev/sda: 3509759966

[INFO ] Remove inactive system logical volume /dev/VGExaDb/LVDbSys1

[INFO ] Remove logical volume /dev/VGExaDb/LVDbOra1

[INFO ] Extend logical volume /dev/VGExaDb/LVDbExaVMImages

[INFO ] Resize ocfs2 on logical volume /dev/VGExaDb/LVDbExaVMImages

[INFO ] XEN boot version and rpm versions are in sync

[INFO ] XEN EFI files will not be updated

[INFO ] Force setup grub

[root@node8 ~]#

Check the success of the reclaim disks procedure:

[root@node8 ~]# /opt/oracle.SupportTools/reclaimdisks.sh -check

Model is ORACLE SERVER X7-2

Number of LSI controllers: 1

Physical disks found: 4 (252:0 252:1 252:2 252:3)

Logical drives found: 1

Linux logical drive: 0

RAID Level for the Linux logical drive: 5

Physical disks in the Linux logical drive: 4 (252:0 252:1 252:2 252:3)

Dedicated Hot Spares for the Linux logical drive: 0

Global Hot Spares: 0

Valid. Disks configuration: RAID5 from 4 disks with no global and dedicated hot spare disks.

Valid. Booted: DOM0. Layout: DOM0.

[root@node8 ~]#

Upload the Oracle Exadata Database Machine Deployment Assistant configuration files to the database server, together with all software images, and run the One command procedure.

List of all Steps

[root@exatestdbadm01 linux-x64]# ./install.sh -cf TVD-exatest.xml -l

Initializing

1. Validate Configuration File

2. Update Nodes for Eighth Rack

3. Create Virtual Machine

4. Create Users

5. Setup Cell Connectivity

6. Calibrate Cells

7. Create Cell Disks

8. Create Grid Disks

9. Install Cluster Software

10. Initialize Cluster Software

11. Install Database Software

12. Relink Database with RDS

13. Create ASM Diskgroups

14. Create Databases

15. Apply Security Fixes

16. Install Exachk

17. Create Installation Summary

18. Resecure Machine

[root@exatestdbadm01 linux-x64]#

Run Step One to validate the setup

This example includes the creation of three different Clusters.

[root@exatestdbadm01 linux-x64]# ./install.sh -cf TVD-exatest.xml -s 1

Initializing

Executing Validate Configuration File

Validating cluster: Cluster-EFU

Locating machines...

Verifying operating systems...

Validating cluster networks...

Validating network connectivity...

Validating private ips on virtual cluster

Validating NTP setup...

Validating physical disks on storage cells...

Validating users...

Validating cluster: Cluster-PR1

Locating machines...

Verifying operating systems...

Validating cluster networks...

Validating network connectivity...

Validating private ips on virtual cluster

Validating NTP setup...

Validating physical disks on storage cells...

Validating users...

Validating cluster: Cluster-VAL

Locating machines...

Verifying operating systems...

Validating cluster networks...

Validating network connectivity...

Validating private ips on virtual cluster

Validating NTP setup...

Validating physical disks on storage cells...

Validating users...

Validating platinum...

Validating switches...

Checking disk reclaim status...

Checking Disk Tests Status....

Completed validation...

SUCCESS: Ip address: 10.x8.xx.40 is configured correctly

SUCCESS: Ip address: 10.x9.xx.55 is configured correctly

SUCCESS: Ip address: 10.x8.xx.41 is configured correctly

SUCCESS: Ip address: 10.x9.xx.56 is configured correctly

SUCCESS: Ip address: 10.x8.xx.45 is configured correctly

SUCCESS: Ip address: 10.x8.xx.46 is configured correctly

SUCCESS: Ip address: 10.x8.xx.44 is configured correctly

SUCCESS: Ip address: 10.x8.xx.43 is configured correctly

SUCCESS: Ip address: 10.x8.xx.42 is configured correctly

SUCCESS: 10.x8.xx.40 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.55 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.41 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.56 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.45 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.46 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.44 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.43 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.42 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.40 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.55 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.41 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.56 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.45 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.46 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.44 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.43 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.42 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.40 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.55 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.41 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.56 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.45 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.46 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.44 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.43 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.42 configured correctly on exatestceladm03.my.domain.com

SUCCESS: Ip address: 10.x8.xx.47 is configured correctly

SUCCESS: Ip address: 10.x9.xx.57 is configured correctly

SUCCESS: Ip address: 10.x8.xx.48 is configured correctly

SUCCESS: Ip address: 10.x9.xx.58 is configured correctly

SUCCESS: Ip address: 10.x8.xx.52 is configured correctly

SUCCESS: Ip address: 10.x8.xx.51 is configured correctly

SUCCESS: Ip address: 10.x8.xx.53 is configured correctly

SUCCESS: Ip address: 10.x8.xx.50 is configured correctly

SUCCESS: Ip address: 10.x8.xx.49 is configured correctly

SUCCESS: 10.x8.xx.47 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.57 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.48 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.58 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.52 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.51 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.53 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.50 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.49 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.47 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.57 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.48 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.58 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.52 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.51 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.53 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.50 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.49 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.47 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.57 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.48 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.58 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.52 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.51 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.53 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.50 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.49 configured correctly on exatestceladm03.my.domain.com

SUCCESS: Ip address: 10.x8.xx.54 is configured correctly

SUCCESS: Ip address: 10.x9.xx.59 is configured correctly

SUCCESS: Ip address: 10.x8.xx.55 is configured correctly

SUCCESS: Ip address: 10.x9.xx.60 is configured correctly

SUCCESS: Ip address: 10.x8.xx.58 is configured correctly

SUCCESS: Ip address: 10.x8.xx.60 is configured correctly

SUCCESS: Ip address: 10.x8.xx.59 is configured correctly

SUCCESS: Ip address: 10.x8.xx.57 is configured correctly

SUCCESS: Ip address: 10.x8.xx.56 is configured correctly

SUCCESS: 10.x8.xx.54 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.59 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.55 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x9.xx.60 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.58 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.60 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.59 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.57 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.56 configured correctly on exatestceladm01.my.domain.com

SUCCESS: 10.x8.xx.54 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.59 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.55 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x9.xx.60 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.58 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.60 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.59 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.57 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.56 configured correctly on exatestceladm02.my.domain.com

SUCCESS: 10.x8.xx.54 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.59 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.55 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x9.xx.60 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.58 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.60 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.59 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.57 configured correctly on exatestceladm03.my.domain.com

SUCCESS: 10.x8.xx.56 configured correctly on exatestceladm03.my.domain.com

SUCCESS: Validated NTP server 10.x3.xx.xx0

SUCCESS: Validated NTP server 10.x3.xx.xx1

SUCCESS: Required file /EXAVMIMAGES/onecommand/linux-x64/WorkDir/p28514222_122118_Linux-x86-64.zip exists...

SUCCESS: Required file /EXAVMIMAGES/onecommand/linux-x64/WorkDir/p28762988_12201181016GIOCT2018RU_Linux-x86-64.zip exists...

SUCCESS: Required file /EXAVMIMAGES/onecommand/linux-x64/WorkDir/p28762989_12201181016DBOCT2018RU_Linux-x86-64.zip exists...

SUCCESS: Required file config/exachk.zip exists...

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm03.my.domain.com, machine type: storage

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm02.my.domain.com, machine type: storage

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm01.my.domain.com, machine type: storage

SUCCESS: Expected machine exatestdbadm01.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: Expected machine exatestdbadm02.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: NTP servers on machine exatestceladm02.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm01.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm03.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm01.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm02.my.domain.com verified successfully

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm02.my.domain.com

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm01.my.domain.com

SUCCESS: Expected machine exatestdbadm02.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm01.my.domain.com, machine type: storage

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm02.my.domain.com, machine type: storage

SUCCESS: Expected machine exatestdbadm01.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm03.my.domain.com, machine type: storage

SUCCESS: NTP servers on machine exatestceladm03.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm01.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm02.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm02.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm01.my.domain.com verified successfully

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm02.my.domain.com

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm01.my.domain.com

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm03.my.domain.com, machine type: storage

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm02.my.domain.com, machine type: storage

SUCCESS: Found Operating system LinuxPhysical and configuration file expects LinuxPhysical on machine exatestceladm01.my.domain.com, machine type: storage

SUCCESS: Expected machine exatestdbadm02.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: Expected machine exatestdbadm01.my.domain.com to have OS Type of Linux Dom0, and found OsType LinuxDom0

SUCCESS: NTP servers on machine exatestceladm03.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm02.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestceladm01.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm01.my.domain.com verified successfully

SUCCESS: NTP servers on machine exatestdbadm02.my.domain.com verified successfully

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm02.my.domain.com

SUCCESS: Sufficient memory for all the guests on database node exatestdbadm01.my.domain.com

SUCCESS: Switch IP 10.x9.xx.51 resolves successfully to host exatest-iba0.my.domain.com on node exatestceladm03.my.domain.com

SUCCESS:

SUCCESS: Switch IP 10.x9.xx.51 resolves successfully to host exatest-iba0.my.domain.com on node exatestceladm02.my.domain.com

SUCCESS: Switch IP 10.x9.xx.52 resolves successfully to host exatest-ibb0.my.domain.com on node exatestceladm03.my.domain.com

SUCCESS:

SUCCESS:

SUCCESS:

SUCCESS: Switch IP 10.x9.xx.52 resolves successfully to host exatest-ibb0.my.domain.com on node exatestceladm02.my.domain.com

SUCCESS:

SUCCESS: Switch IP 10.x9.xx.51 resolves successfully to host exatest-iba0.my.domain.com on node exatestceladm01.my.domain.com

SUCCESS: Switch IP 10.x9.xx.52 resolves successfully to host exatest-ibb0.my.domain.com on node exatestceladm01.my.domain.com

SUCCESS:

SUCCESS: X7 compute node exatestdbadm01.my.domain.com has updated Broadcom firmware

SUCCESS: X7 compute node exatestdbadm02.my.domain.com has updated Broadcom firmware

SUCCESS: Disk Tests are not running/active on any of the Storage Servers.

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm01

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm02

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm01

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm02

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm01

SUCCESS: Cluster Version 12.2.0.1.181016 is compatible with OL7 on exatestdbadm02

SUCCESS: Disk size 10000GB on cell exatestceladm01.my.domain.com matches the value specified in the OEDA configuration file

SUCCESS: Disk size 10000GB on cell exatestceladm02.my.domain.com matches the value specified in the OEDA configuration file

SUCCESS: Disk size 10000GB on cell exatestceladm03.my.domain.com matches the value specified in the OEDA configuration file

SUCCESS: Disk size 10000GB on cell exatestceladm04.my.domain.com matches the value specified in the OEDA configuration file

SUCCESS: Disk size 10000GB on cell exatestceladm05.my.domain.com matches the value specified in the OEDA configuration file

SUCCESS: Disk size 10000GB on cell exatestceladm06.my.domain.com matches the value specified in the OEDA configuration file

Successfully completed execution of step Validate Configuration File [elapsed Time [Elapsed = 250301 mS [4.0 minutes] Thu Mar 07 12:35:31 CET 2019]]

[root@exatestdbadm01 linux-x64]#

Execution of all remaining steps

Than, because we felt confident, we decide to invoke all remaining steps together:

root@exatestdbadm01 linux-x64]# ./install.sh -cf TVD-exatest.xml -r 1-18

...

..

The final result is the Exadata Machine installed with six Oracle VMs, and three Grid Infrastructure clusters each one running a test RAC database.